INCREASING TERMINAL EFFICIENCY THROUGH AI

Optical Character Recognition (OCR) and Optical Feature Recognition (OFR) are typical AI vision solutions used in container terminal gate and crane operations, providing input data to the Gate Operation System (GOS) and the Terminal Operation System (TOS). Keeping track of trucks, containers and trailers is key to terminal operations. The accuracy of this data is a major concern, since automatically captured data triggers a series of processes in the terminal. Actions based on incorrect data take considerable resources to correct, affecting operations, efficiency and, ultimately, the terminal’s bottom line.

PIONEERING IN COMPUTER VISION

Camco is renowned for its high-performing OCR/OFR engines as well as for its automation solutions serving many terminals across the globe. Over 20 years, Camco software developers have built up substantial know-how in image analysis by staying on top of the latest technology trends: from Principal Component Analysis (PCA) for optical character recognition, through Histogram of Oriented Gradients (HOG) for object detection, to Convolutional Neural Networks (CNN), which achieve state-of-the-art analysis but rely on big datasets and considerable computing power. Today, machine learning and deep learning enable the development of powerful vision-based applications and increase the accuracy and recognition rates of our camera systems. Camco’s proprietary OCR/OFR solutions are acknowledged as the market reference in visual identification.

DEEP LEARNING BASED IMAGE ANALYSIS

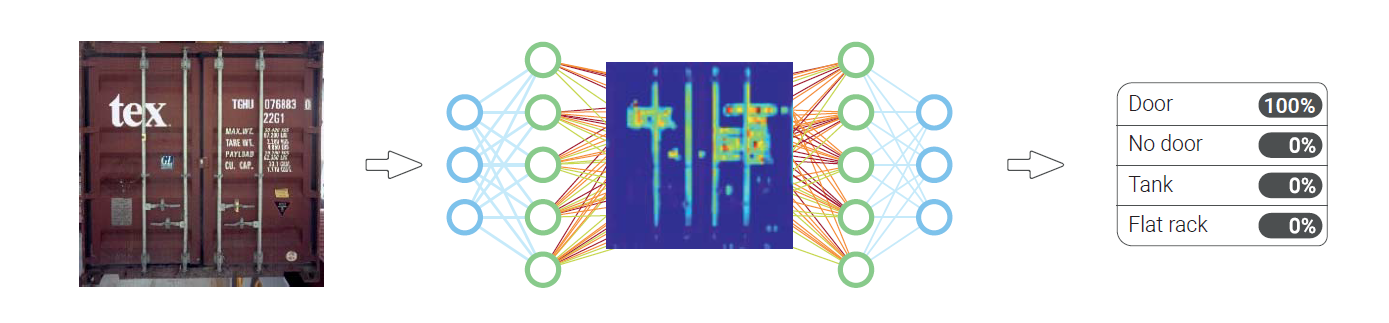

Deep learning is one of many domains within Machine Learning (ML), and perhaps the most popular one. It encompasses several technologies; for work that is mostly image-based, Convolutional Neural Networks (CNNs) are among the most groundbreaking. They are mainly used to classify, detect or segment objects in an image.

A CNN typically consists of an input layer, a series of hidden layers and an output or prediction layer. The hidden convolutional layers process image input much like our brain’s primary visual cortex processes signals arriving from the optic nerves. The (artificial) neurons in each layer are trained to respond to specific stimuli or features in the image: first low-level features such as lines and colors, then increasingly complex features, e.g., silhouettes and object parts, as signals propagate further through the network. All this information is then combined into a final prediction: a number of class labels and/or bounding boxes, each with a probability. (See the cs.ryerson.ca website for a simplified visualisation of the CNN process.)
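
The layer sequence described above can be made concrete with a minimal NumPy sketch of a single forward pass: one convolution with a hand-set vertical-edge kernel, a ReLU, max pooling, and a dense prediction layer followed by softmax to produce a probability per class. The image, kernel, dense weights and the two hypothetical classes are illustrative assumptions; a real CNN stacks many such layers and learns its weights instead of having them set by hand.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation, which CNN libraries call convolution."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Keep the maximum of each non-overlapping size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - np.max(z))  # subtract max for numerical stability
    return e / e.sum()


def forward(image, kernel, dense_w, dense_b):
    features = max_pool(relu(conv2d(image, kernel)))  # hidden layer: feature map
    logits = dense_w @ features.ravel() + dense_b      # prediction layer
    return softmax(logits)                             # one probability per class


# Hypothetical 6x6 input with a bright vertical stroke, like part of a "1"
image = np.zeros((6, 6))
image[:, 2] = 1.0
vertical_edge = np.array([[-1.0, 1.0, 0.0]] * 3)       # hand-set 3x3 kernel
dense_w = np.array([[1.0, 1.0, 1.0, 1.0],              # class 0: "stroke"
                    [-1.0, -1.0, -1.0, -1.0]])         # class 1: "background"
dense_b = np.zeros(2)

probs = forward(image, vertical_edge, dense_w, dense_b)
```

Here the edge kernel fires on the left border of the stroke, so the pooled features push almost all probability onto class 0.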

Analogy between the human brain image analysis processes and Convolutional Neural Networks used in Deep Learning image analysis

LEARNING BY EXPERIENCE

Just like the human brain, a CNN learns from experience. When provided with a large number of labeled images (the training set), the network learns which features make up a specific object. What the network actually learns are the values of millions of weights and biases that define the connections of a multi-layered network. Feature extraction in each successive layer, based on these learned weights, ultimately produces a practical output: an object’s class and its location.
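
The idea that what is learned are weights and biases can be shown at the smallest possible scale: a single artificial neuron (logistic regression) trained by gradient descent on a few labeled examples. This is a toy sketch under stated assumptions; the two-feature dataset, learning rate and epoch count are hypothetical, and a real CNN applies the same principle (gradient descent on a loss, via backpropagation) to millions of parameters.

```python
import numpy as np


def train_neuron(x: np.ndarray, y: np.ndarray, lr: float = 0.5, epochs: int = 2000):
    """Learn weights w and bias b of a sigmoid neuron by minimizing cross-entropy."""
    rng = np.random.default_rng(0)
    w = rng.normal(scale=0.1, size=x.shape[1])  # small random initial weights
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))  # forward pass: predicted probability
        grad = p - y                             # d(loss)/d(logit) for cross-entropy
        w -= lr * x.T @ grad / len(y)            # gradient step on the weights
        b -= lr * grad.mean()                    # gradient step on the bias
    return w, b


def predict(x: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    return (1.0 / (1.0 + np.exp(-(x @ w + b))) >= 0.5).astype(int)


# Hypothetical labeled training set: two hand-crafted features per image
x_train = np.array([[0.9, 0.1], [0.8, 0.2], [0.7, 0.3],
                    [0.1, 0.9], [0.2, 0.8], [0.3, 0.7]])
y_train = np.array([1, 1, 1, 0, 0, 0])

w, b = train_neuron(x_train, y_train)
```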

Note that AI developers are still heavily involved in designing the architecture and in training, evaluating and deploying the models. Improving system performance after deployment also relies on feeding operator assessments and insights back into the software and algorithms, for example by adding layers to the initial network architecture or by re-training the model on an extended dataset that includes previously misrecognized samples.
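
The re-training step described above, extending the dataset with samples that were misrecognized before, can be sketched as follows. This is an illustrative assumption rather than Camco’s pipeline: the `Sample` type, the operator-verified label field and the `model` callable are hypothetical, and the actual training run is left to whatever procedure is in use.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    image_id: str
    label: str  # verified ground truth, e.g. as corrected by an operator


def collect_misrecognized(samples: List[Sample], model: Callable[[str], str]) -> List[Sample]:
    """Return the samples whose model reading differs from the verified label."""
    return [s for s in samples if model(s.image_id) != s.label]


def extend_training_set(training_set: List[Sample], field_samples: List[Sample],
                        model: Callable[[str], str]) -> List[Sample]:
    """Add previously misrecognized field samples (skipping duplicates) for re-training."""
    known = {s.image_id for s in training_set}
    hard = [s for s in collect_misrecognized(field_samples, model)
            if s.image_id not in known]
    return training_set + hard  # new list; the original training set is untouched


# Hypothetical data: a tiny training set and two operator-verified field samples
training = [Sample("img-001", "AB12CD")]
field = [Sample("img-101", "XY34ZW"), Sample("img-102", "KL56MN")]
# Stand-in for a trained model's readings; "img-102" is misread (8 instead of 6)
model_readings = {"img-101": "XY34ZW", "img-102": "KL58MN"}

extended = extend_training_set(training, field, lambda image_id: model_readings[image_id])
```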

Every OCR/OFR application requires a dedicated CNN.

SETTING UP THE APPLICATION NETWORK ARCHITECTURE

Training a CNN requires a large training set, and designing the best-suited network architecture, i.e., the definition of its layers and operations, can be very time-consuming. It is sometimes more beneficial to build on state-of-the-art networks released by various research groups and adapt them to our needs.

Camco’s AI strength also lies in analyzing the specific circumstances of each problem, gathering high-quality datasets, and optimizing models and training procedures within time and memory constraints. Fine-tuning methods and models is an iterative process, as new data keeps flowing in and new deep learning discoveries are made.

AI & DEEP LEARNING COMPETENCE

Deep learning is a powerful tool driving AI, but it is not a standalone solution capable of tackling every problem. Sometimes a lack of samples, or the complex and unpredictable nature of a problem, requires a combination of technologies and algorithms. That is where Camco’s experience in many other AI domains makes the difference.

HARDWARE: DEEP LEARNING PURPOSE BUILT CAMERAS

Deep learning relies on complex models that perform an enormous number of matrix operations, so the hardware and the software need to work in perfect harmony. Camco puts a lot of effort and investment into capturing the best images on the market. Developing its own cameras, which are often used in very harsh conditions, gives Camco the flexibility to keep improving image quality.
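
For a rough sense of that scale: in a standard (non-grouped) convolutional layer, the number of multiply-accumulate operations (MACs) is the product of output height, output width, output channels, kernel height, kernel width and input channels. The sketch below simply evaluates that formula; the layer dimensions in the example are assumptions chosen for illustration, not figures for any Camco network.

```python
def conv_macs(out_h: int, out_w: int, out_channels: int,
              kernel_h: int, kernel_w: int, in_channels: int) -> int:
    """Multiply-accumulate operations of one standard 2D convolution layer (bias ignored)."""
    # Every output value needs kernel_h * kernel_w * in_channels multiply-adds
    return out_h * out_w * out_channels * kernel_h * kernel_w * in_channels


# Hypothetical first layer: RGB input, 64 filters of 3x3, 224x224 output map
macs = conv_macs(224, 224, 64, 3, 3, 3)
print(f"{macs:,} MACs")  # 86,704,128 MACs
```

A deep network repeats this for many layers, for every image, which is why parallel hardware matters.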

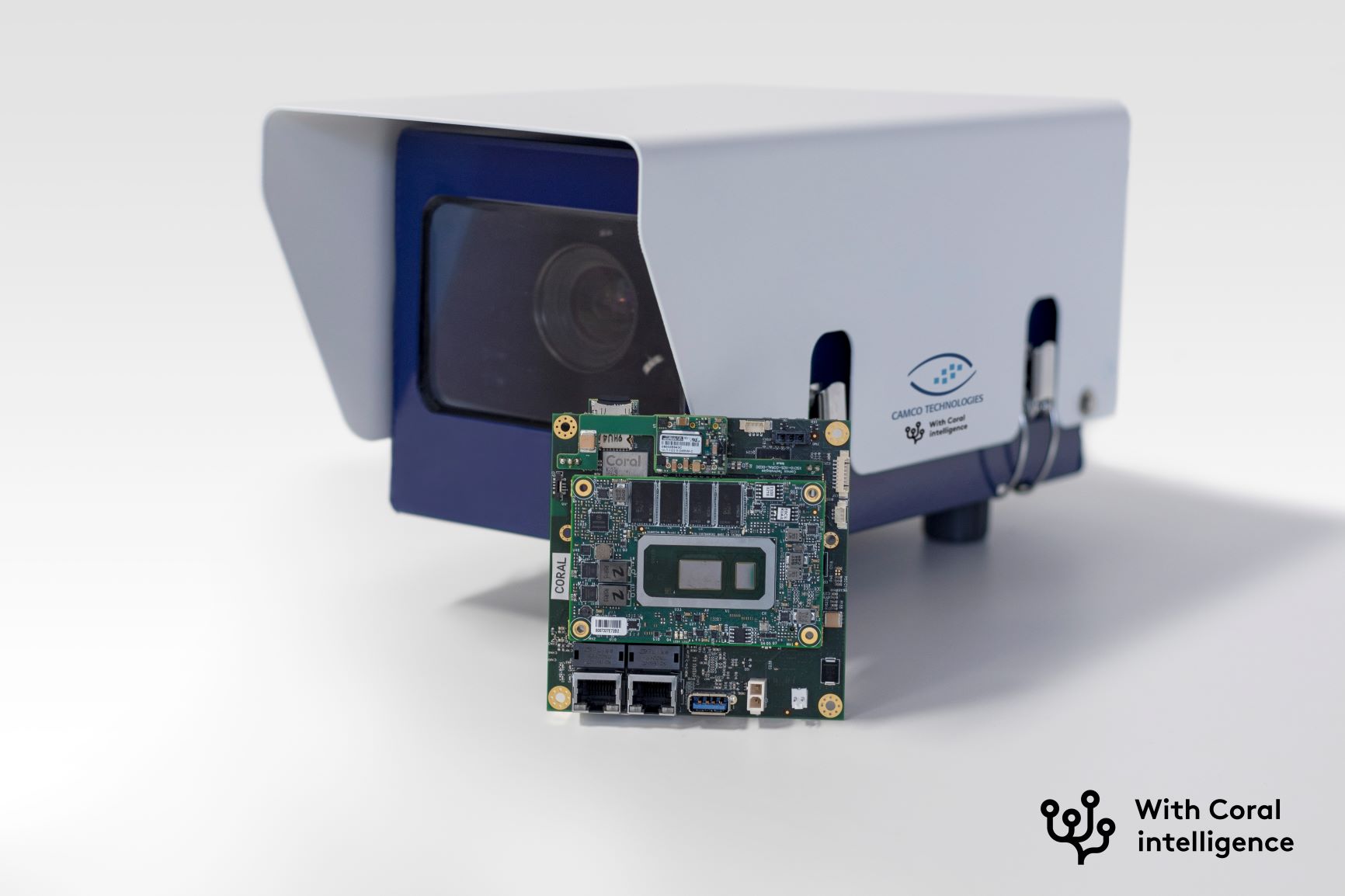

Deep learning requires a lot of parallel computing power. Still, Camco advocates local processing, since moving images across networks is time-consuming and creates dependencies in terms of timing and reliability. The latest generation of Camco intelligent cameras embeds fast Intel i3 processors, offering adequate performance (results within seconds) for most of our deep learning applications. Some applications, such as streaming License Plate Reading (LPR), require faster processing.

That is why Camco also integrates an NVIDIA Jetson TX2 or a Coral Accelerator Module featuring the Google Edge TPU into its new series of intelligent cameras. With these modules, we can run our software on a dedicated AI accelerator (the Jetson’s GPU or the Edge TPU), allowing the use of deeper, more complex and better-performing networks. These hardware innovations contribute to better images, more embedded computing power, higher accuracy and speed and, ultimately, better AI performance.
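
One practical consequence of targeting the Edge TPU is that it requires TensorFlow Lite models quantized to 8-bit integers. The sketch below shows the generic affine (scale and zero-point) int8 quantization scheme applied to a weight tensor. It is a NumPy illustration of the principle under stated assumptions, not the TensorFlow Lite converter and not a description of Camco’s deployment flow; the weights are random placeholders.

```python
import numpy as np


def quantize_int8(x: np.ndarray):
    """Affine int8 quantization: x is approximated by scale * (q - zero_point)."""
    x_min = min(float(x.min()), 0.0)  # include 0 so that zero is exactly representable
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / 255.0 if x_max > x_min else 1.0
    zero_point = int(round(-128 - x_min / scale))  # integer that represents 0.0
    q = np.clip(np.round(x / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map int8 values back to approximate real values."""
    return scale * (q.astype(np.float64) - zero_point)


# Hypothetical float32 layer weights, as a trained network might contain
rng = np.random.default_rng(42)
weights = rng.normal(0.0, 0.05, size=256).astype(np.float32)

q, scale, zero_point = quantize_int8(weights)
max_error = float(np.max(np.abs(dequantize(q, scale, zero_point) - weights)))
```

Each weight now takes one byte instead of four, and the reconstruction error stays at most about half a quantization step (`scale / 2`).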

Coral Accelerator Module featuring the Google Edge TPU integrated in Camco’s latest OCR camera systems.

CAPTURING IN- AND OUTBOUND CONTAINERS

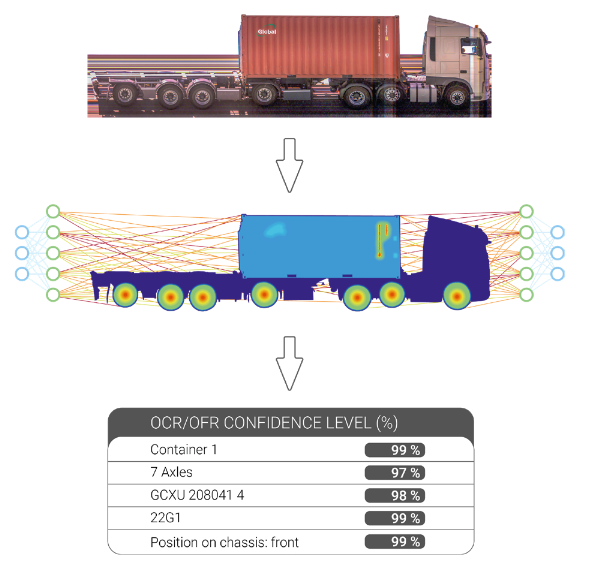

Truck and container feature registration are probably the AI applications that add the most value in a container terminal environment. The set of features to be registered depends on the terminal process and determines the dataset and sometimes the network architecture. Some features require OCR, for example reading the container number and ISO code. Other applications require the detection of physical features (OFR), such as the presence of seals and dangerous goods labels, or cargo classification. Automated damage inspection is another application, checking container condition at pick-up or drop-off.
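
A container number reading can also be sanity-checked independently of the CNN: under ISO 6346, the eleventh character of a container number is a check digit computed from the first ten (owner code, category identifier and six-digit serial number). The sketch below validates an OCR result against that rule. Using it as a post-OCR plausibility check is an assumption for illustration, not a description of Camco’s OCR engine.

```python
import string


def _iso6346_letter_values() -> dict:
    """ISO 6346 letter values: A=10, then ascending, skipping multiples of 11."""
    values, v = {}, 10
    for ch in string.ascii_uppercase:
        if v % 11 == 0:  # 11, 22 and 33 are not used
            v += 1
        values[ch] = v
        v += 1
    return values


LETTER_VALUES = _iso6346_letter_values()


def check_digit(first_ten: str) -> int:
    """Check digit for owner code (3 letters) + category (1 letter) + 6-digit serial."""
    total = sum(
        (LETTER_VALUES[ch] if ch in LETTER_VALUES else int(ch)) * 2 ** position
        for position, ch in enumerate(first_ten)
    )
    return total % 11 % 10  # a remainder of 10 is written as 0


def is_valid_container_number(reading: str) -> bool:
    """True if an OCR reading such as 'CSQU3054383' passes the ISO 6346 check."""
    reading = reading.replace(" ", "").upper()
    if len(reading) != 11:
        return False
    if not all(c in LETTER_VALUES for c in reading[:4]):
        return False
    if not all(c in string.digits for c in reading[4:]):
        return False
    return check_digit(reading[:10]) == int(reading[10])
```

In such a setup, a reading that fails the check could be routed to operator verification even when the engine reports a high confidence level.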

Camco Optical Character Recognition (OCR) and Optical Feature Recognition (OFR) applications for container, trailer and truck.

IMAGE READINGS AND CONFIDENCE LEVELS

Each OCR/OFR engine generates a set of feature results, each with a corresponding confidence level. The confidence level is used to decide whether a reading is trustworthy or needs operator verification. It is influenced by factors such as the quality or wear of container markings and the lighting conditions. Enlarging the training set and continuously fine-tuning the algorithms improve the performance and confidence levels of the visual recognition application. Collecting high-quality datasets and optimizing the data, the models and the training procedures are complex processes that require expertise and resources.
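
The decision described above, accepting a reading automatically or sending it to an operator, amounts to comparing each result’s confidence level with a threshold. A minimal sketch, assuming a per-feature threshold table; the feature names, threshold values and the `Reading` type are hypothetical, and in practice thresholds would be tuned per project on validation data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Reading:
    feature: str       # e.g. "container_number", "iso_code"
    value: str
    confidence: float  # 0.0 .. 1.0, as reported by the engine


# Hypothetical thresholds; real values would be tuned per terminal and feature
THRESHOLDS = {"container_number": 0.90, "iso_code": 0.85}
DEFAULT_THRESHOLD = 0.95  # stricter fallback for features without a tuned value


def route(readings: List[Reading]) -> Tuple[List[Reading], List[Reading]]:
    """Split readings into auto-accepted results and those needing operator verification."""
    accepted, to_verify = [], []
    for r in readings:
        threshold = THRESHOLDS.get(r.feature, DEFAULT_THRESHOLD)
        (accepted if r.confidence >= threshold else to_verify).append(r)
    return accepted, to_verify


accepted, to_verify = route([
    Reading("container_number", "CSQU3054383", 0.97),
    Reading("iso_code", "22G1", 0.62),
    Reading("seal_present", "yes", 0.99),
])
```

A higher threshold lowers the risk of incorrect data triggering downstream processes, at the cost of more exception jobs; a lower one does the opposite.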

Camco OCR/OFR confidence levels for container presence identification, axle count, container number and ISO code, and container location on chassis for an undisclosed project.

OVERCOMING INCOMPLETE INFORMATION

Camco feeds its networks large numbers of high-quality images and annotations (e.g., the objects of interest in an image and their location within the image). Our extensive, augmented datasets, containing images from all over the world, form the basis of our generic CNN models, which produce better recognition rates and thus lower the number of exception jobs. The following examples illustrate the added value of Camco’s AI and deep learning OCR/OFR technology:

Partly occluded characters or symbols (1); dirty and damaged characters; mixed font sizes and font colors (2); puzzle characters (3); shadows; damage (4).

Well-trained CNN models are able to generalize and fill in the gaps when characters are damaged or slightly different from the samples used for training.
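
Augmented datasets are typically produced by applying random, label-preserving perturbations to existing training images, so the network sees variations like the occlusions, shadows and lighting changes in the examples above. The NumPy sketch below shows three such perturbations on a grayscale image array with values in [0, 1]; the specific transforms and parameter ranges are assumptions for illustration, not Camco’s augmentation recipe.

```python
import numpy as np


def random_brightness(img, rng, low=0.6, high=1.4):
    """Scale intensities to mimic different lighting conditions."""
    return np.clip(img * rng.uniform(low, high), 0.0, 1.0)


def random_occlusion(img, rng, max_frac=0.3):
    """Black out a random rectangle to mimic partly occluded or damaged characters."""
    h, w = img.shape
    oh = int(rng.integers(1, min(h, max(1, int(h * max_frac))) + 1))
    ow = int(rng.integers(1, min(w, max(1, int(w * max_frac))) + 1))
    top = int(rng.integers(0, h - oh + 1))
    left = int(rng.integers(0, w - ow + 1))
    out = img.copy()
    out[top:top + oh, left:left + ow] = 0.0
    return out


def random_shadow(img, rng, strength=0.5):
    """Darken one side of a random vertical edge to mimic a cast shadow."""
    edge = int(rng.integers(0, img.shape[1] + 1))
    out = img.copy()
    if rng.random() < 0.5:
        out[:, :edge] *= strength
    else:
        out[:, edge:] *= strength
    return out


def augment(img, rng):
    """Apply all three perturbations; the image's label stays unchanged."""
    return random_shadow(random_occlusion(random_brightness(img, rng), rng), rng)


rng = np.random.default_rng(7)
original = np.full((32, 96), 0.8)  # hypothetical 32x96 grayscale crop
variants = [augment(original, rng) for _ in range(5)]
```

Each call yields a new variant while the original image is left untouched, so one labeled sample can contribute many training examples.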

SIGNIFICANTLY IMPROVING RESULTS WITH AI AND CNN

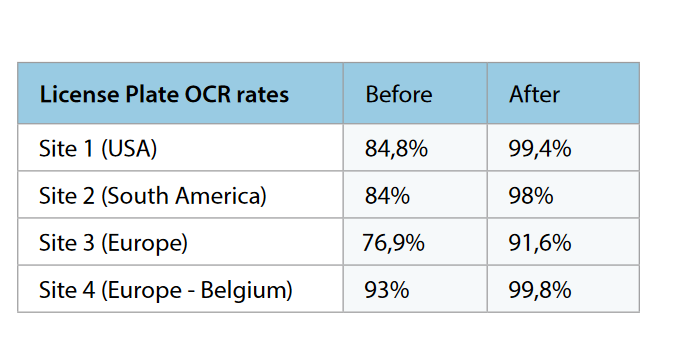

In license plate recognition, the performance difference between traditional and CNN-based OCR technology becomes very clear: in recent projects, Camco AI software improved License Plate Reading accuracy rates by 7 to 15%, achieving 99.8% LPR accuracy in one particular European project.
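
Accuracy rates like these are commonly computed as the share of plates read exactly right against a verified ground truth. The sketch below shows that calculation for two engines and the difference in percentage points; the plates and readings are made-up placeholders, not results from any project.

```python
from typing import Sequence


def read_accuracy(readings: Sequence[str], ground_truth: Sequence[str]) -> float:
    """Fraction of plates whose reading matches the verified plate exactly."""
    if len(readings) != len(ground_truth) or not ground_truth:
        raise ValueError("need exactly one reading per ground-truth plate")
    hits = sum(r == t for r, t in zip(readings, ground_truth))
    return hits / len(ground_truth)


# Made-up placeholder data, not results from any project
truth       = ["AB123CD", "EF456GH", "IJ789KL", "MN012OP"]
traditional = ["AB123CD", "EF456GH", "IJ789KI", "MN0120P"]  # two misreads
cnn_based   = ["AB123CD", "EF456GH", "IJ789KL", "MN0120P"]  # one misread

gain_pp = 100 * (read_accuracy(cnn_based, truth) - read_accuracy(traditional, truth))
```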

Significant improvement of OCR hit rates for License Plate Reading with the Camco Truck OCR Portal.